The Future of Privacy Forum (FPF) published a framework for biometric data regulations for immersive technologies on Tuesday.

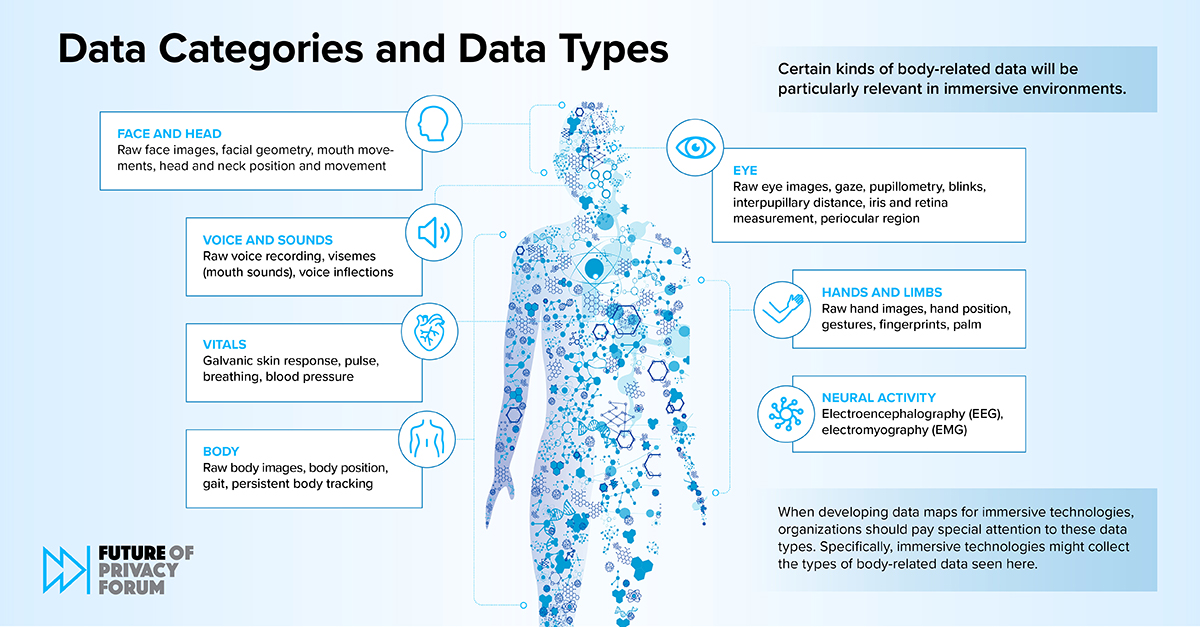

The FPF’s Risk Framework for Body-Related Data in Immersive Technologies report discusses best practices for collecting, using, and transferring body-related data across entities.

#NEW: @futureofprivacy releases its ‘Risk Framework for Body-Related Data in Immersive Technologies’ by authors @spivackjameson & @DanielBerrick.

This analysis assists organizations to ensure they’re handling body-related data safely & responsibly. https://t.co/FC1VOsaAFe

— Future of Privacy Forum (@futureofprivacy) December 12, 2023

Organisations, businesses, and individuals can adopt the FPF’s observations as recommendations and as a foundation for safe, responsible extended reality (XR) policies. This applies in particular to entities that require large amounts of biometric data for immersive technologies.

Additionally, those following the report’s guidelines can apply the framework to document their reasons and methodologies for handling biometric data, comply with laws and standards, evaluate privacy and safety risks, and weigh ethical considerations when collecting data from devices.

The framework applies not only to XR-related organisations but also to any institution leveraging technologies dependent on the processing of biometrics.

Jameson Spivack, Senior Policy Analyst, Immersive Technologies, and Daniel Berrick, Policy Counsel, co-authored the report.

Your Data: Handled with Care

In order to understand how to handle personal data, organisations must identify potential privacy risks, ensure compliance with laws, and implement best practices to boost safety and privacy, the FPF explained.

According to Stage One of the framework, organisations can do so by:

Creating data maps that outline their data practices linked to biometric information

Documenting their use of data and practices

Identifying pertinent stakeholders, direct and third-party, affected by the organisation’s data practices

In Stage Two, companies would analyse applicable legal frameworks to ensure compliance. This would involve companies collecting, using, or transferring “body-related data” impacted by US privacy laws.

To comply, the framework recommends that organisations “understand the individual rights and business obligations” applicable to “existing comprehensive and sectoral privacy laws,” it read.

Organisations should also analyse emerging laws and regulations and how they would impact “body-based data practices.”

In Stage Three, companies, organisations, and institutions should identify and assess risks to others. The report explained that this includes the individuals, communities, and societies they serve.

It said that privacy risks and harms could derive from data “used or handled in particular ways, or transferred to particular parties.”

It added that legal compliance “will not be sufficient to mitigate risks.”

In order to maximise safety, companies can follow several steps to protect data, such as proactively identifying and reducing risks associated with data practices.

This would involve impacts on the following:

Identifiability

Use to make key decisions

Sensitivity

Partners and other third-party groups

The potential for inferences

Data retention

Data accuracy and bias

User expectations and understanding

After evaluating a group’s data use policy, organisations can assess the fairness and ethics behind its data practices, based on the identified risks, it explained.

Finally, the FPF framework recommended the implementation of best practices in Stage Four, which involved a “number of legal, technical, and policy safeguards organisations can use.”

It added this would help organisations keep up to date with “statutory and regulatory compliance, minimize privacy risks, and ensure that immersive technologies are used fairly, ethically, and responsibly.”

The framework recommends that organisations intentionally implement best practices by comprehensively “touching all parts of the data lifecycle and addressing all relevant risks.”

Organisations can also collaboratively implement best practices using those “developed in consultation with multidisciplinary teams within an organization.”

These would involve legal, product, engineering, trust, safety, and privacy-related stakeholders.

Organisations can protect their data by:

Localising and processing data on devices and storage

Minimising data footprints

Regulating or implementing third-party management

Offering meaningful notice and consent

Preserving data integrity

Providing user controls

Incorporating privacy-enhancing technologies

After adopting these best practices, organisations could evaluate them and align them into a coherent strategy. Afterwards, they could assess them on an ongoing basis to maintain efficacy.

EU Proceeds with Artificial Intelligence (AI) Act

The news comes right after the European Union moved forward with its AI Act, which the FPF states will have a “broad extraterritorial impact.”

Currently under negotiation with member states, the legislation aims to protect citizens from harmful and unethical use of AI-based solutions.

Political agreement was reached on the EU’s #AIAct, which will have a broad extraterritorial impact. If you would like to gain insights into key legal implications of the regulation, join @kate_deme for an in-depth FPF training tomorrow at 11 am ET.: https://t.co/weVgDdsvRh

— Future of Privacy Forum (@futureofprivacy) December 11, 2023

The organisation is offering guidance, expertise, and training for companies as the Act prepares to enter into force. The Act marks one of the biggest changes in data privacy policy since the introduction of the General Data Protection Regulation (GDPR) in May 2016.

The European Commission stated it wants to “regulate artificial intelligence (AI)” to ensure improved conditions for using and rolling out the technology.

It said in a statement,

“In April 2021, the European Commission proposed the first EU regulatory framework for AI. It says that AI systems that can be used in different applications are analysed and classified according to the risk they pose to users. The different risk levels will mean more or less regulation. Once approved, these will be the world’s first rules on AI”

According to the Commission, it aims to approve the Act by the end of the year.

Biden-Harris Executive Order on AI

In late October, the Biden-Harris administration implemented an executive order on the regulation of AI. The Government’s Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence aims to safeguard citizens around the world from the harmful effects of AI programmes.

Enterprises, organisations, and experts will need to comply with the new regulations, which require “developers of the most powerful AI systems” to share their safety assessments with the US Government.

Responding to the plan, the FPF said it was “incredibly comprehensive” and offered a “whole of government approach and with an impact beyond government agencies.”

It continued in its official statement,

“Although the executive order focuses on the government’s use of AI, the influence on the private sector will be profound due to the extensive requirements for government vendors, worker surveillance, education and housing priorities, the development of standards to conduct risk assessments and mitigate bias, the investments in privacy enhancing technologies, and more”

The statement also called on lawmakers to implement “bipartisan privacy legislation.” Doing so was “the most important precursor for protections for AI that impact vulnerable populations.”

UK Hosts AI Safety Summit

Additionally, the UK hosted its AI Safety Summit at the iconic Bletchley Park, where world-renowned scientist Alan Turing cracked the Nazis’ World War II-era Enigma cryptography.

At the world-class event, some of the industry’s top-level experts, executives, companies, and organisations gathered to outline protections to regulate AI.

Attendees included the US, UK, and EU governments, the UN, the Alan Turing Institute, the Future of Life Institute, Tesla, OpenAI, and many others. The groups discussed methods to create a shared understanding of the risks of AI, collaborate on best practices, and develop a framework for AI safety research.

The Fight for Data Rights

The news comes as several organisations enter fresh alliances in order to tackle ongoing concerns over the use of virtual, augmented, and mixed reality (VR/AR/MR), AI, and other emerging technologies.

For example, Meta Platforms and IBM launched a massive alliance to develop best practices for artificial intelligence and biometric data, and to help create regulatory frameworks for tech companies worldwide.

The Global AI Alliance hosts more than 30 organisations, companies, and individuals from across the global tech community and includes tech giants such as AMD, HuggingFace, CERN, The Linux Foundation, and others.

Additionally, organisations like the Washington, DC-based XR Association, Europe’s XR4Europe alliance, the globally-recognised Metaverse Standards Forum, and the Gatherverse, among others, have contributed greatly to the implementation of best practices for those involved in building the future of spatial technologies.