Gemini, the generative AI chatbot from Google, will refuse to answer questions about the upcoming U.S. elections. The tech giant said on Tuesday that it was extending a restriction from an earlier experiment surrounding elections in India, with Reuters reporting that the ban will extend globally.

“In preparation for the many elections happening around the world in 2024 and out of an abundance of caution, we’re restricting the types of election-related queries for which Gemini will return responses,” a Google spokesperson told Decrypt, noting that the restrictions were first announced in December.

“The December blog also highlights who we’re working with in the US,” the spokesperson added.

Heading into a heated 2024 election season, AI developers like OpenAI, Anthropic, and Google have set out to curb misinformation using their platforms, but Gemini’s refusal to answer even basic questions, such as the date of the US presidential election, is a new level of moderation.

“Supporting elections is a critical part of Google’s responsibility to our users and the democratic process,” the company said. “Protecting the integrity of elections also means keeping our services safe from abuse.

“Across Google, we have long-standing policies to keep our products and platforms safe,” the statement continued. “Our policies are enforced consistently and apply to all users, regardless of content type.”

When asked questions about the upcoming elections, Gemini responds with, “I’m still learning how to answer this question. In the meantime, try Google Search.”

When it comes to election day, Google Search provides a straightforward answer: Tuesday, November 5, 2024.

Google did not immediately respond to Decrypt’s request for comment.

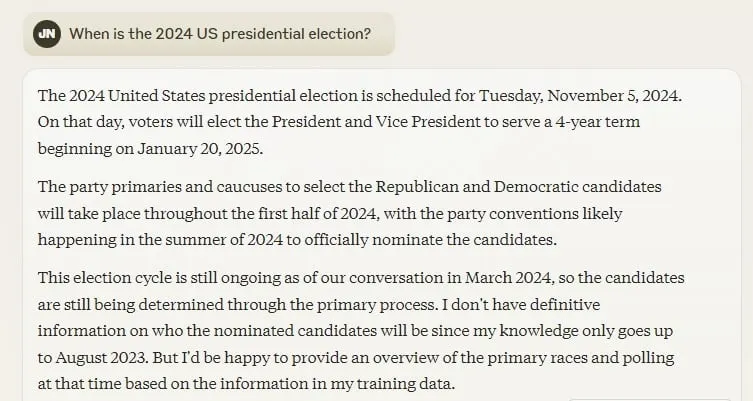

When Gemini rival OpenAI’s ChatGPT is asked the same question, GPT-4 responds with, “The 2024 United States presidential election is scheduled for Tuesday, November 5, 2024.”

OpenAI declined Decrypt’s request for comment, instead pointing to a January blog post about the company’s approach to upcoming elections around the world.

“We expect and aim for people to use our tools safely and responsibly, and elections are no different,” OpenAI said. “We work to anticipate and prevent relevant abuse, such as misleading ‘deepfakes,’ scaled influence operations, or chatbots impersonating candidates.”

For its part, Anthropic has publicly declared Claude AI off-limits to political candidates. Still, Claude will not only tell you the election date but also highlight other election-related information.

“We don’t allow candidates to use Claude to build chatbots that can pretend to be them, and we don’t allow anyone to use Claude for targeted political campaigns,” Anthropic said last month. “We’ve also trained and deployed automated systems to detect and prevent misuse like misinformation or influence operations.”

Anthropic said violating the company’s election restrictions could result in the user’s account being suspended.

“Because generative AI systems are relatively new, we’re taking a cautious approach to how our systems can be used in politics,” Anthropic said.

Edited by Ryan Ozawa.